Building a Simple Artificial Neural Network in JavaScript

This article discusses how to build a simple neural network in JavaScript. First, though, let’s look at what deep neural networks and artificial neural networks are.

Deep Neural Network and Artificial Neural Network

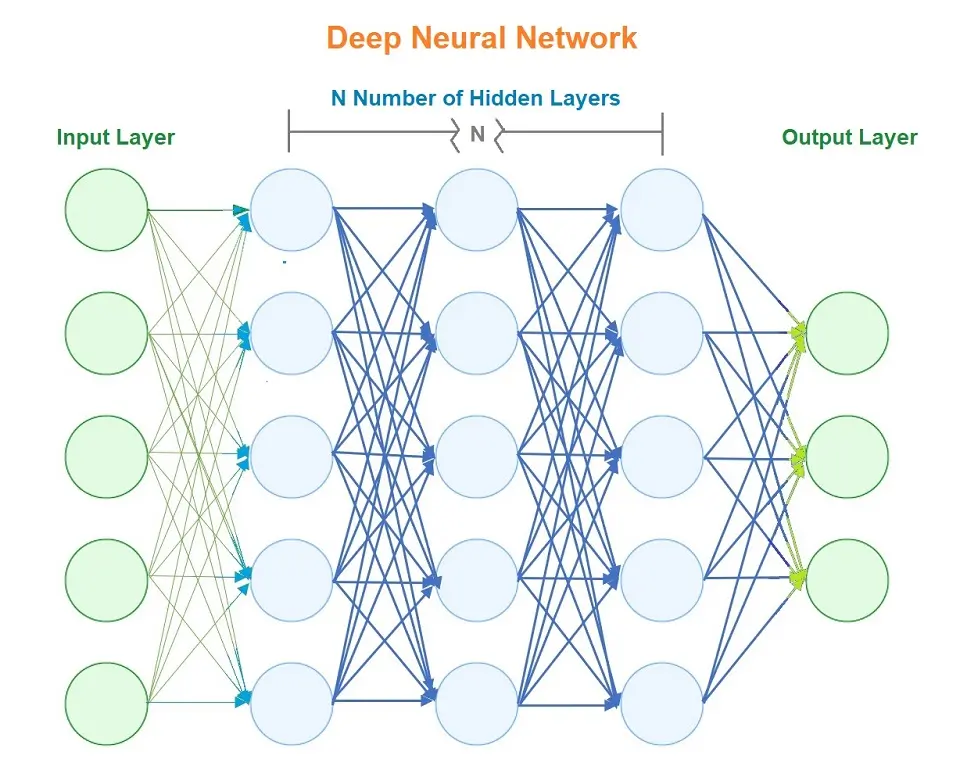

Artificial Neural Networks (ANNs) and Deep Neural Networks (DNNs) are related concepts, but they are not the same thing. Both are used in machine learning and artificial intelligence, and both are inspired by the biological neural networks that make up animal brains. Here’s the distinction between the two:

Artificial Neural Networks (ANNs):

- ANNs are the foundation for neural networks in computer science and artificial intelligence.

- They consist of neurons (nodes or units) arranged in layers: an input layer, one or more hidden layers, and an output layer.

- ANNs can have a variable number of hidden layers and neurons, and the depth of an ANN is generally the number of layers between the input and output layers.

- They can model complex patterns and relationships within data, which makes them useful for tasks such as classification, regression, and even playing games.

Deep Neural Networks (DNNs):

- DNNs are a subcategory of ANNs. They are essentially ANNs with many hidden layers, hence the term “deep.”

- The “deep” in DNN refers to the depth of the network, which allows these networks to learn complex and high-level features from data.

- They have been at the forefront of many recent advances in AI, such as computer vision, natural language processing, and speech recognition, due to their ability to process and learn from vast amounts of data.

The key difference is the depth: while all DNNs are ANNs, not all ANNs are “deep.” Typically, when an ANN has two or more hidden layers, we can call it a Deep Neural Network or DNN.

The depth of these networks gave rise to the term “deep learning,” which describes the learning process in neural networks with many layers. Deep learning is behind many of the most advanced AI systems because deep networks can extract features at many levels and represent complex abstractions.

This article will look at the basics of a neural network, how it works, and how to build a simple neural network in JavaScript.

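The Simplest Part of an ANN Is a Perceptron

A perceptron is the simplest form of neural network: a single layer of neurons, each of which computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. Stacking multiple layers of perceptrons on top of each other creates a multilayer neural network.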

Design of Artificial Neural Network

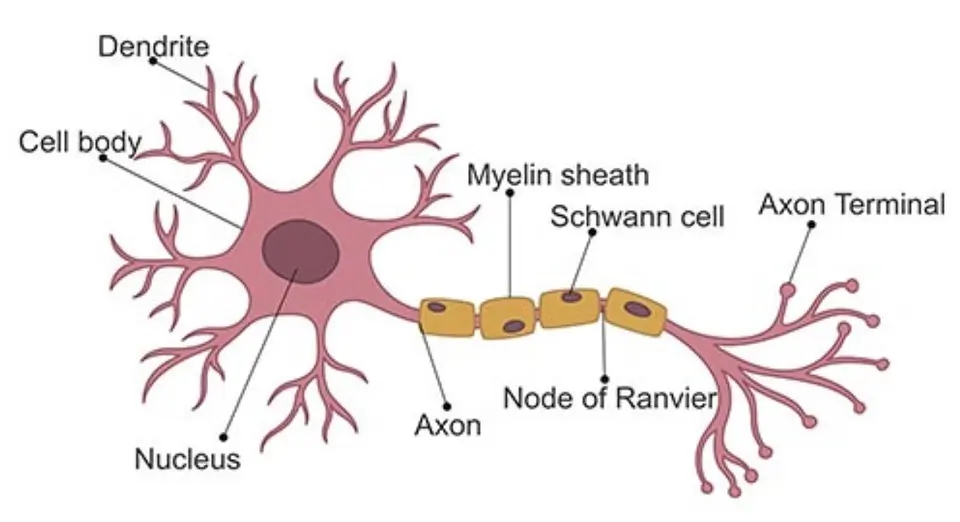

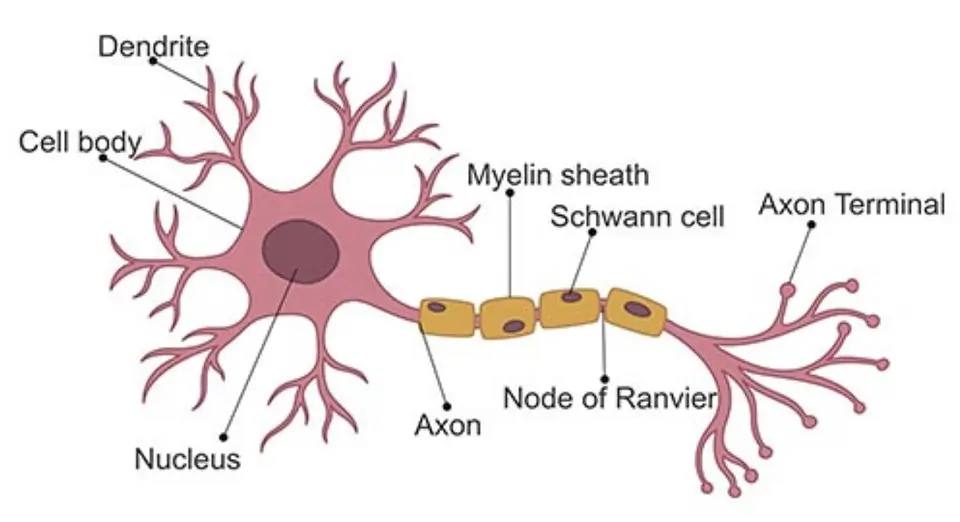

To understand the design of an ANN, we have to look at the brain.

The design of a neural network is inspired by the neurons in the human body and the way they process information. A biological neuron is a simple node: information enters through the dendrites, is processed in the soma (the neuron body), and leaves the neuron through an output called the axon.

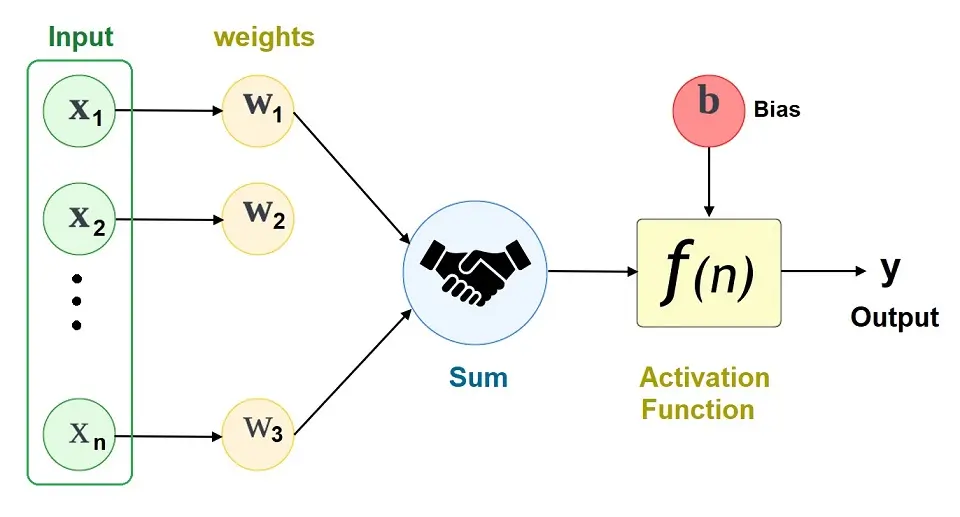

Now let’s look at the image of a perceptron.

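Looking at the Math for an Artificial Neural Network

The math for a single neuron (and, in matrix form, for a whole layer) is:

output = activationFunction( (Weight * Input) + bias )

Here, Weight * Input means multiplying each input by its corresponding weight and adding up the products.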
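To make the formula concrete, here is a minimal sketch of a single neuron using sigmoid as the activation function. The function name neuronOutput and the example inputs, weights, and bias are illustrative assumptions, not part of the article’s code.

```javascript
// One neuron: output = activationFunction((weights · inputs) + bias).
// The inputs, weights and bias below are made-up example values.
const sigmoid = (x) => 1 / (1 + Math.exp(-x));

function neuronOutput(inputs, weights, bias, activationFunction) {
  if (inputs.length !== weights.length) {
    throw new Error("Each input needs exactly one weight");
  }
  // Weighted sum: multiply each input by its weight and add the products.
  let weightedSum = 0;
  for (let i = 0; i < inputs.length; i++) {
    weightedSum += inputs[i] * weights[i];
  }
  // Add the bias, then pass the result through the activation function.
  return activationFunction(weightedSum + bias);
}

const inputs = [1.0, 2.0, 3.0];
const weights = [0.2, -0.5, 0.1];
const bias = 0.5;
// weighted sum = 0.2 - 1.0 + 0.3 = -0.5; plus bias = 0; sigmoid(0) = 0.5
console.log(neuronOutput(inputs, weights, bias, sigmoid)); // 0.5
```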

Now, let’s look at how that translates to a neural network.

The key terms are:

- Inputs

- Weights

- Bias

- Activation functions

- Output

Input

The input is the data that enters the neuron to be processed and then passed on as output.

Weights and Biases

When a neural network layer receives data, it transforms that data before passing it on to the next layer. The first step of that transformation is multiplying the data by the layer’s weights.

In this context, a weight represents the strength of the connection between two neurons in adjacent layers. Small weights pass on smaller values, and larger weights pass on larger values. The product of the weights and the inputs matters because a small result may mean that the node does not fire to the next node.

Weights and biases usually start with random, and therefore poor, values. During training, an optimization algorithm such as gradient descent adjusts them repeatedly to reduce the network’s error.

Biases are values added to the product of the inputs and weights. A bias shifts the weighted sum up or down, which moves the point at which the neuron starts to fire and lets it produce a non-zero output even when all its inputs are zero. Loosely speaking, the bias is an assumption the layer makes independently of the input: a small bias means the layer makes a small assumption, and a large bias means it leans strongly toward (or away from) firing.
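The gradient-descent adjustment of weights and biases can be sketched for the simplest possible case: one neuron with no activation function (prediction = w * x + b), trained with mean squared error on a few points that lie on y = 2x + 1. The function name trainLinearNeuron, the toy data, the learning rate of 0.05, and the 2,000 epochs are illustrative assumptions; this is not part of the NeuralNetwork class shown later in this article.

```javascript
// Minimal gradient-descent sketch for a single neuron without an
// activation function (prediction = w * x + b), using mean squared error.
function trainLinearNeuron(xs, ys, learningRate = 0.05, epochs = 2000) {
  // Start from random values between -1 and 1, like randomize() does.
  let w = Math.random() * 2 - 1;
  let b = Math.random() * 2 - 1;
  const n = xs.length;
  for (let epoch = 0; epoch < epochs; epoch++) {
    let gradW = 0;
    let gradB = 0;
    for (let i = 0; i < n; i++) {
      const error = w * xs[i] + b - ys[i]; // prediction minus target
      gradW += (2 / n) * error * xs[i]; // d(MSE)/dw
      gradB += (2 / n) * error; // d(MSE)/db
    }
    // Step both parameters against their gradients.
    w -= learningRate * gradW;
    b -= learningRate * gradB;
  }
  return { w, b };
}

// Points sampled from y = 2x + 1, so training should recover w ≈ 2 and b ≈ 1.
const { w, b } = trainLinearNeuron([0, 1, 2, 3], [1, 3, 5, 7]);
console.log(w.toFixed(2), b.toFixed(2));
```

The learning rate controls the step size: too large and the updates overshoot and diverge, too small and training needs many more epochs.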

Activation functions

An activation function is applied to the output of a neural network layer, transforming each value before it is passed on. Most activation functions are nonlinear, which lets the neural network learn complex patterns from the data. It also helps the neuron determine what gets fired on to the next layer.

Types of activation functions

The following are the main types of activation functions that we use in artificial neural networks.

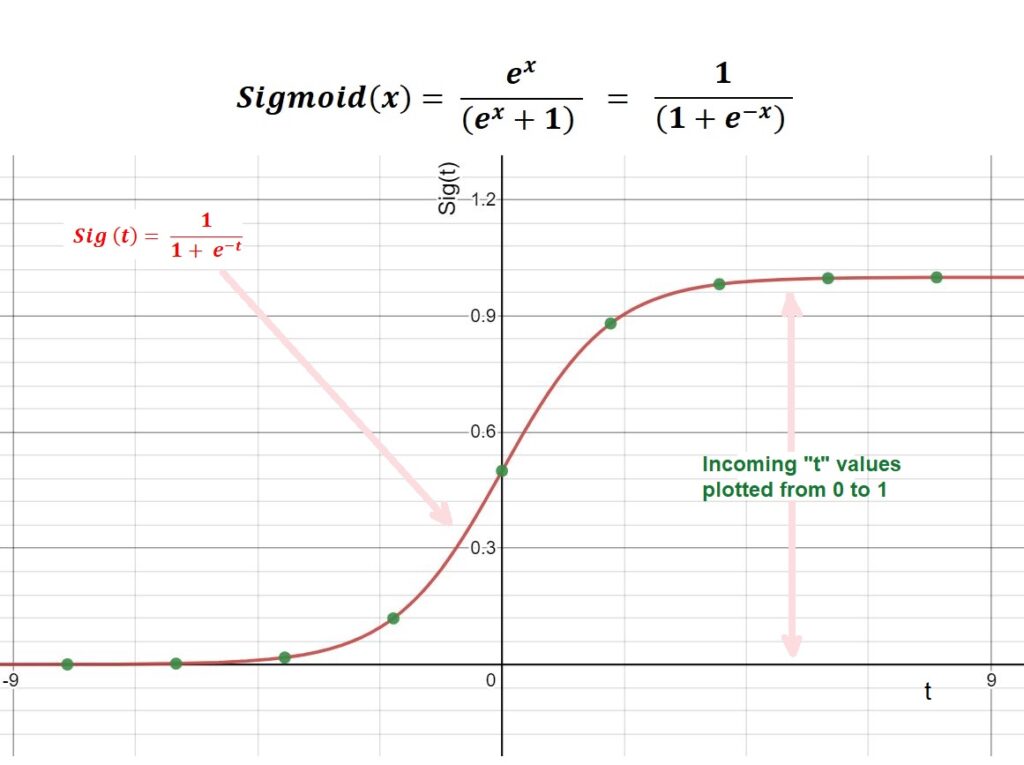

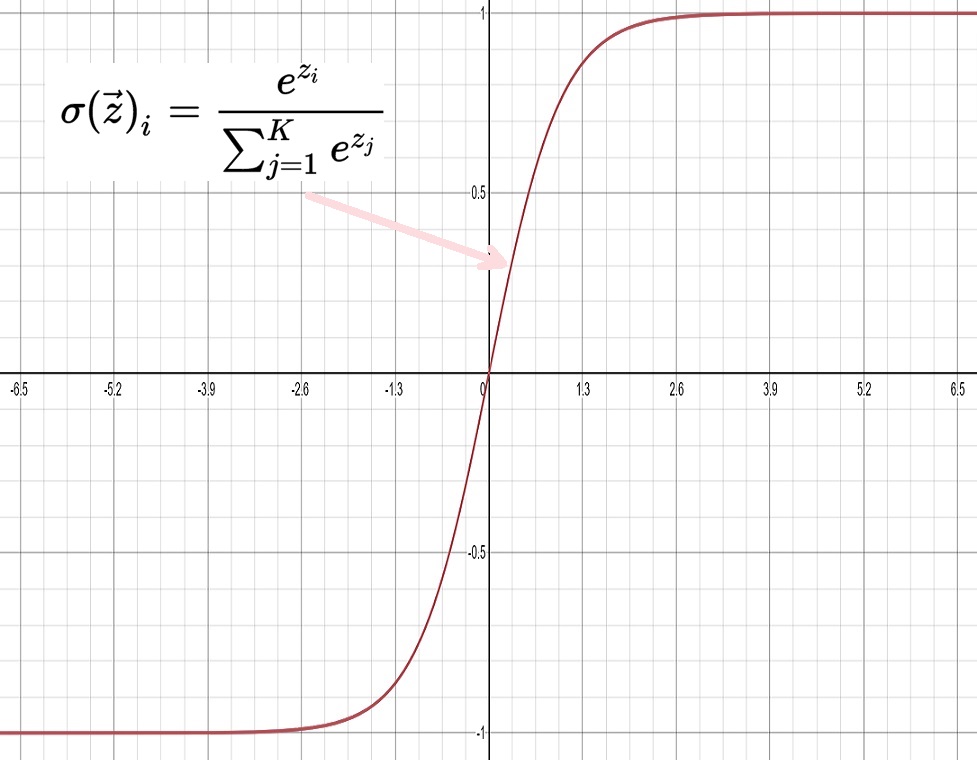

Sigmoid

This is one of the most widely used activation functions. It squashes any number into the range between 0 and 1, and it is nonlinear.

Implementation

const sigmoid = (x) =>{

return 1 / (1 + Math.exp(-x));

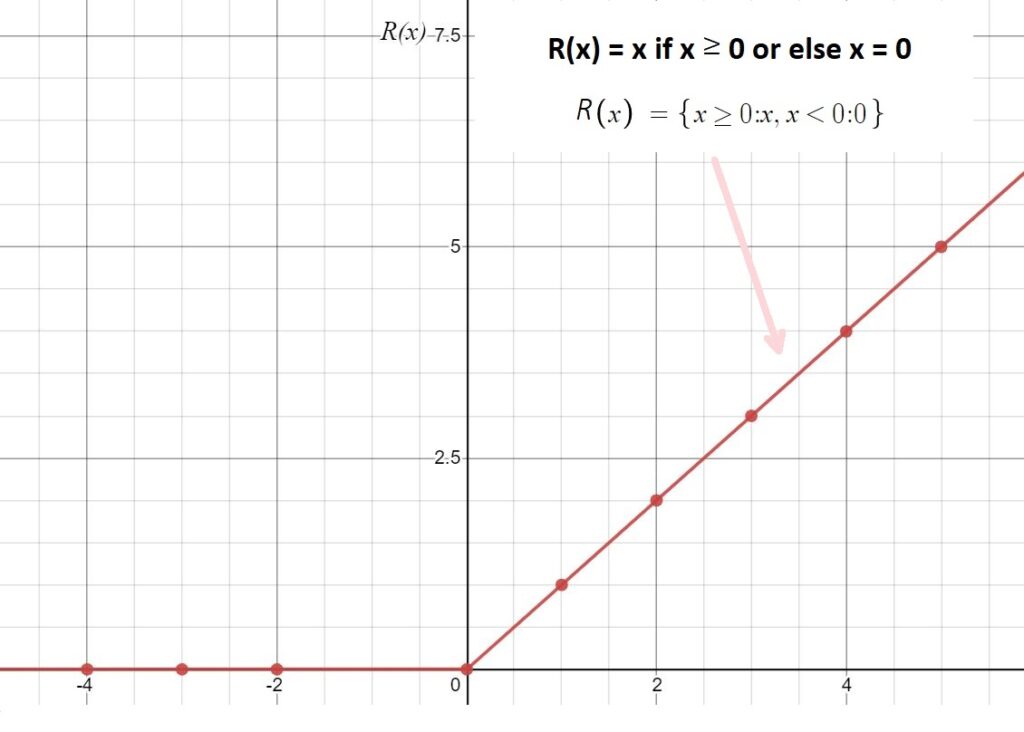

}Rectified Linear Unit (RELU)

ReLU, or Rectified Linear Unit, was created to solve the saturation problem of functions like sigmoid, which flatten out for large inputs. It is piecewise linear: it passes positive values through unchanged and turns values less than 0 into zero.

Implementation

const relu = (x) => {
  // Negative values become 0; positive values pass through unchanged.
  return Math.max(0, x);
};

Softmax

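Softmax is another useful activation function, but it differs from the rest: it operates on a whole vector of outputs rather than on one value at a time, and it turns those outputs into a probability distribution whose values are between 0 and 1 and sum to 1. When softmax is applied at the output layer and each output node is mapped to a defined class, each value can be read as the probability the network assigns to that class.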
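A minimal softmax sketch follows. The function name softmax and the example scores are assumptions for illustration; subtracting the largest score before exponentiating is a common trick that leaves the result unchanged but prevents Math.exp from overflowing on large inputs.

```javascript
// Softmax: turns a vector of raw output scores into probabilities
// that are each between 0 and 1 and add up to 1.
function softmax(scores) {
  // Subtracting the maximum does not change the result, but keeps
  // Math.exp from overflowing to Infinity for large scores.
  const max = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - max));
  const total = exps.reduce((sum, e) => sum + e, 0);
  return exps.map((e) => e / total);
}

// Hypothetical output-layer scores for three classes.
const probabilities = softmax([2.0, 1.0, 0.1]);
console.log(probabilities); // roughly [0.659, 0.242, 0.099]
```

The class with the highest score always gets the highest probability, so picking the predicted class only needs the index of the largest value.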

Advantages of an activation function

- They keep values restricted to a certain range. For example, sigmoid keeps its output between 0 and 1, which prevents node outputs from growing toward infinity (because, honestly, they can if you make mistakes).

- They add non-linearity to the neural network, so it can model data whose patterns are not linear, for example data in three dimensions that cannot be separated by a flat plane.

- They help decide whether the data from a layer should fire, that is, be passed on to the next layer.
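The non-linearity point can be checked with a small sketch: two layers with no activation function between them always collapse into a single linear layer, while putting ReLU between them produces a bent (piecewise) function instead of a straight line. The scalar weights and biases and the function names here are hypothetical values chosen for illustration.

```javascript
// Two one-neuron "layers" with illustrative (not trained) parameters.
const w1 = 3, b1 = -1; // first layer
const w2 = 2, b2 = 0.5; // second layer

// Without an activation, stacking layers is still just a linear function...
const twoLinearLayers = (x) => w2 * (w1 * x + b1) + b2;
// ...identical to ONE layer with weight w2*w1 and bias w2*b1 + b2.
const oneLinearLayer = (x) => (w2 * w1) * x + (w2 * b1 + b2);

// With ReLU between the layers, the output is no longer a straight line.
const relu = (x) => Math.max(0, x);
const twoLayersWithRelu = (x) => w2 * relu(w1 * x + b1) + b2;

for (const x of [-2, 0, 1, 2]) {
  console.log(x, twoLinearLayers(x), oneLinearLayer(x), twoLayersWithRelu(x));
}
```

For negative inputs the ReLU version stays flat at 0.5, while for larger inputs it rises with slope 6, which no single linear layer can reproduce.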

The code implementation

Let’s look at the code:

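Matrix.js

This class stores the matrix data and handles the matrix operations our neural net needs: multiplication, element-wise addition and subtraction, applying a function to every element, and random initialization.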

class Matrix {
  // Build a matrix from existing data ({ init: [[...], ...] }), or create a
  // zero-filled matrix with `inputLayer` rows and `outputLayer` columns.
  constructor(data) {
    const { outputLayer, inputLayer, init } = data;
    if (init) {
      this.data = init;
      this.shape = [init.length, Array.isArray(init[0]) ? init[0].length : 1];
    } else {
      this.data = Array.from(Array(inputLayer), () => new Array(outputLayer).fill(0));
      this.shape = [inputLayer, outputLayer];
    }
  }

  // Matrix product: (rows × n) multiplied by (n × cols) gives (rows × cols).
  multiply(matrix) {
    // Simple checks to see if we can multiply these two matrices.
    if (!(matrix instanceof Matrix)) {
      throw new Error('This is not a Matrix');
    }
    if (this.shape[1] !== matrix.shape[0]) {
      throw new Error(`Cannot multiply these two matrices. The object: ${JSON.stringify(this.shape)} multipliedBy: ${JSON.stringify(matrix.shape)}`);
    }
    const newMatrix = new Matrix({
      inputLayer: this.shape[0],
      outputLayer: matrix.shape[1],
    });
    for (let i = 0; i < newMatrix.shape[0]; i++) {
      for (let j = 0; j < newMatrix.shape[1]; j++) {
        let sum = 0;
        for (let k = 0; k < this.shape[1]; k++) {
          sum += this.data[i][k] * matrix.data[k][j];
        }
        newMatrix.data[i][j] = sum;
      }
    }
    return newMatrix;
  }

  // Element-wise addition of a same-shaped Matrix, or of a scalar to every element.
  // Returns a new Matrix; `this` is left unchanged.
  add(matrix) {
    const isMatrix = matrix instanceof Matrix;
    if (isMatrix && (this.shape[0] !== matrix.shape[0] || this.shape[1] !== matrix.shape[1])) {
      throw new Error(`Cannot add shapes ${JSON.stringify(this.shape)} and ${JSON.stringify(matrix.shape)}`);
    }
    const newMatrix = new Matrix({ inputLayer: this.shape[0], outputLayer: this.shape[1] });
    for (let i = 0; i < this.shape[0]; i++) {
      for (let j = 0; j < this.shape[1]; j++) {
        newMatrix.data[i][j] = this.data[i][j] + (isMatrix ? matrix.data[i][j] : matrix);
      }
    }
    return newMatrix;
  }

  // Element-wise subtraction (this - matrix), with the same rules as add().
  subtract(matrix) {
    const isMatrix = matrix instanceof Matrix;
    if (isMatrix && (this.shape[0] !== matrix.shape[0] || this.shape[1] !== matrix.shape[1])) {
      throw new Error(`Cannot subtract shapes ${JSON.stringify(this.shape)} and ${JSON.stringify(matrix.shape)}`);
    }
    const newMatrix = new Matrix({ inputLayer: this.shape[0], outputLayer: this.shape[1] });
    for (let i = 0; i < this.shape[0]; i++) {
      for (let j = 0; j < this.shape[1]; j++) {
        newMatrix.data[i][j] = this.data[i][j] - (isMatrix ? matrix.data[i][j] : matrix);
      }
    }
    return newMatrix;
  }

  // Apply a function to every element of the matrix, in place.
  map(func) {
    for (let i = 0; i < this.shape[0]; i++) {
      for (let j = 0; j < this.shape[1]; j++) {
        this.data[i][j] = func(this.data[i][j]);
      }
    }
    return this;
  }

  // Fill the matrix with random values between -1 and 1.
  randomize() {
    for (let i = 0; i < this.shape[0]; i++) {
      for (let j = 0; j < this.shape[1]; j++) {
        this.data[i][j] = Math.random() * 2 - 1;
      }
    }
  }
}

module.exports = Matrix;

NeuralNet.js

This defines the layers of the network and its forward pass (prediction).

const Matrix = require('./Matrix.js');

// Connects two adjacent layers: holds the weights (prevNode_count × node_count)
// and biases (1 × node_count), both initialized randomly between -1 and 1.
class LayerLink {
  constructor(prevNode_count, node_count) {
    this.weights = new Matrix({ outputLayer: node_count, inputLayer: prevNode_count });
    this.bias = new Matrix({ outputLayer: node_count, inputLayer: 1 });
    this.weights.randomize();
    this.bias.randomize();
  }

  updateWeights(weights) {
    this.weights = weights;
  }

  getWeights() {
    return this.weights;
  }

  getBias() {
    return this.bias;
  }
}

class NeuralNetwork {
  // layers: node count per layer, e.g. [2, 3, 1] = 2 inputs, 3 hidden nodes, 1 output.
  constructor(layers, options = {}) {
    this.id = Math.random();
    this.fitness = 0;
    this.weightsFlat = [];
    this.biasFlat = [];

    if (layers.length < 2) {
      throw new Error('Neural Network Needs At Least 2 Layers To Work.');
    }

    // Default functions; anything passed in `options` overrides them.
    this.options = {
      activation: (x) => 1 / (1 + Math.exp(-x)), // sigmoid
      derivative: (y) => y * (1 - y), // sigmoid derivative, given y = sigmoid(x)
      relu: (x) => Math.max(0, x),
      ...options,
    };

    this.learning_rate = 0.1;
    this.layerCount = layers.length - 1; // One link between each pair of adjacent layers.
    this.inputs = layers[0];
    this.output_nodes = layers[layers.length - 1];
    this.layerLink = [];

    for (let i = 1, j = 0; j < this.layerCount; i++, j++) {
      if (layers[i] <= 0) {
        throw new Error('A Layer Needs To Have At Least One Node (Neuron).');
      }
      // Previous layer's node count & current layer's node count
      this.layerLink[j] = new LayerLink(layers[j], layers[i]);
    }
  }

  // Forward pass: for each layer, result = relu(result × weights + bias).
  // Note that ReLU is applied at every layer, including the output layer.
  predict(input_array) {
    if (input_array.length !== this.inputs) {
      throw new Error(`Expected ${this.inputs} inputs but received ${input_array.length}`);
    }
    let result = new Matrix({ init: [input_array] }); // 1 × inputs row vector
    for (let i = 0; i < this.layerLink.length; i++) {
      result = result.multiply(this.layerLink[i].getWeights());
      result = result.add(this.layerLink[i].getBias());
      result.data = [result.data[0].map(this.options.relu)];
    }
    return result.data[0];
  }
}

module.exports = NeuralNetwork;
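To see the forward pass on its own, here is a dependency-free sketch of the computation predict() performs: for each layer, result = relu(result × weights + bias), with the input treated as a 1 × n row vector as in the Matrix-based code. The helper names rowTimesMatrix and forward, the [2, 3, 1] layer sizes, and the fixed weights (used instead of randomize() so the output is reproducible) are hypothetical.

```javascript
// Sketch of the forward pass for a [2, 3, 1] network using plain arrays.
const relu = (x) => Math.max(0, x);

// Multiply a row vector (length n) by an n × m matrix, giving a row of length m.
function rowTimesMatrix(row, matrix) {
  const cols = matrix[0].length;
  const out = new Array(cols).fill(0);
  for (let j = 0; j < cols; j++) {
    for (let k = 0; k < row.length; k++) {
      out[j] += row[k] * matrix[k][j];
    }
  }
  return out;
}

// Apply every layer in turn: multiply by weights, add bias, apply ReLU.
function forward(input, layers) {
  let result = input;
  for (const { weights, bias } of layers) {
    result = rowTimesMatrix(result, weights).map((v, j) => relu(v + bias[j]));
  }
  return result;
}

// Hypothetical fixed parameters (weights are prevNodes × nodes, as in LayerLink).
const layers = [
  { weights: [[0.5, -1, 0.25], [1, 0.5, -0.5]], bias: [0, 0.5, 1] }, // 2 -> 3
  { weights: [[1], [-1], [2]], bias: [0.25] }, // 3 -> 1
];

console.log(forward([1, 2], layers)); // [ 2.75 ]
```

With the article’s two files in place, the class itself would be used along the lines of `new NeuralNetwork([2, 3, 1]).predict([1, 2])`; because its weights come from randomize(), that output changes from run to run.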

Conclusion

In summary, this article has journeyed through the foundational elements constituting a simple artificial neural network. I explained how inputs are transformed through layers of interconnected neurons—each modulated by weights and biases—to produce a meaningful output.

We cannot overstate the role of activation functions as the source of non-linearity in this network: they enable the model to capture complex patterns and make sophisticated predictions.

With the provided code, we’ve taken these concepts from theory to practice, illustrating the elegance of neural networks in processing information and making decisions.

As we’ve seen, even a simple artificial neural network can embody the intricate dance of data and computation, serving as a stepping stone toward more complex and deep neural architectures. This exploration lays the groundwork for deeper inquiry and application of neural networks in solving real-world problems, truly showcasing the blend of simplicity and power these models offer.

Somto Achu is a software engineer from the U.K. He has many years of experience building applications for the web as both a front-end engineer and a back-end developer. He also writes articles on what he finds interesting in tech.